publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2026

2025

- In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2025

2024

- In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

2022

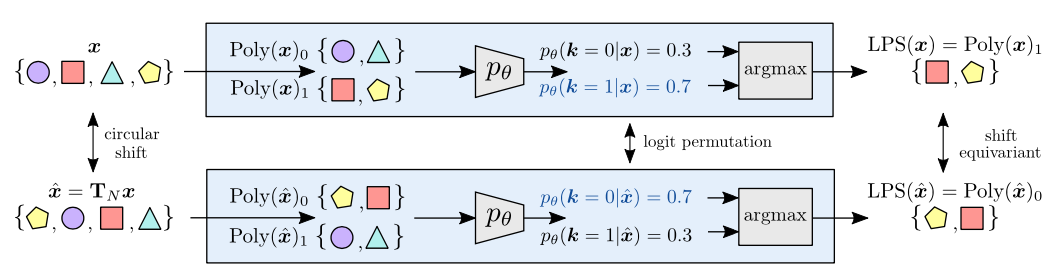

- Advances in Neural Information Processing Systems, 2022

2021

- IEEE Journal of Selected Topics in Signal Processing, 2021

2020

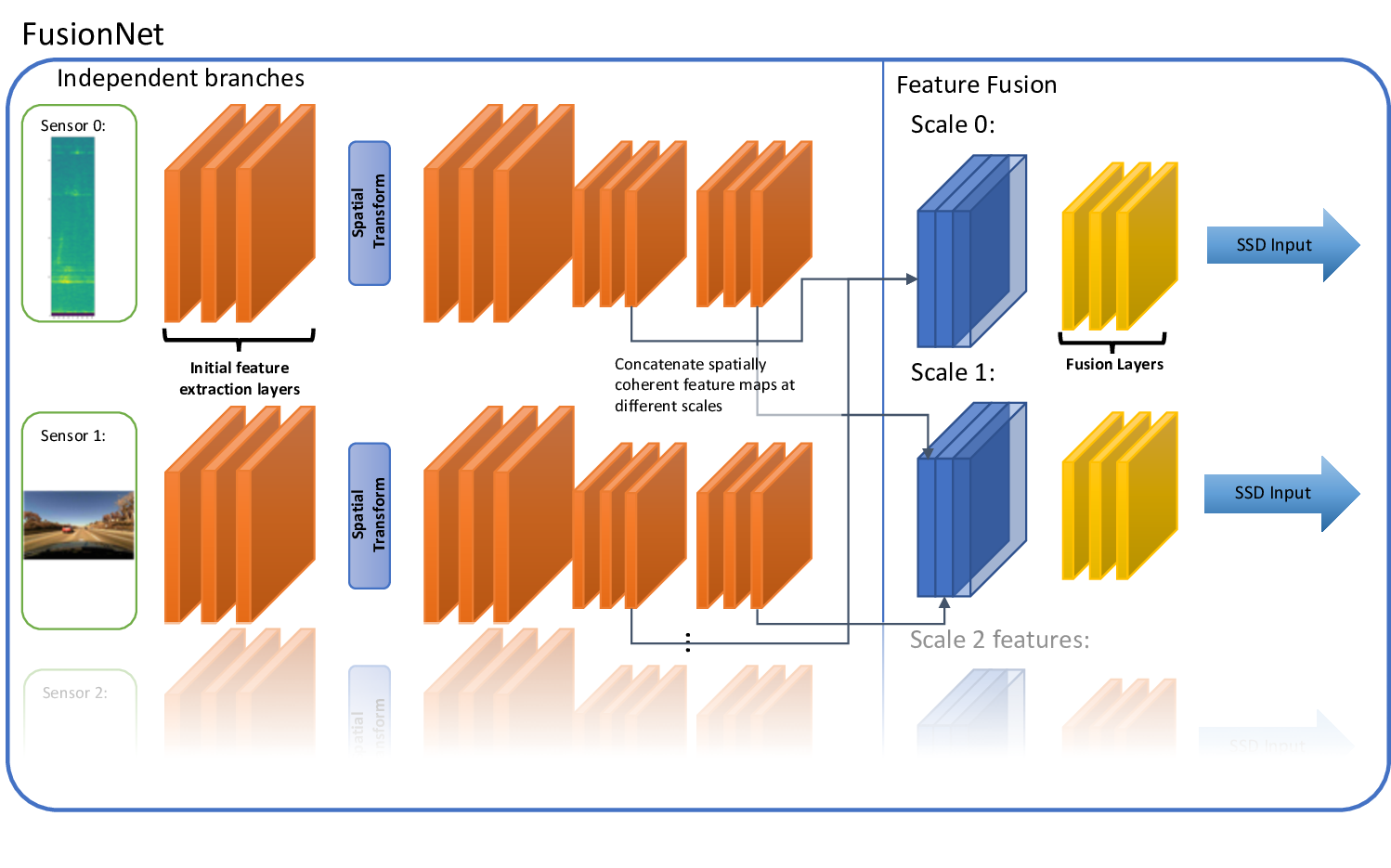

- Early fusion of camera and radar frames, 2020. US Patent App. 16/698,601

2019

- In NeurIPS 2019 ML4AD Workshop, 2019

- In NeurIPS 2019 ML4AD Workshop, 2019

2018

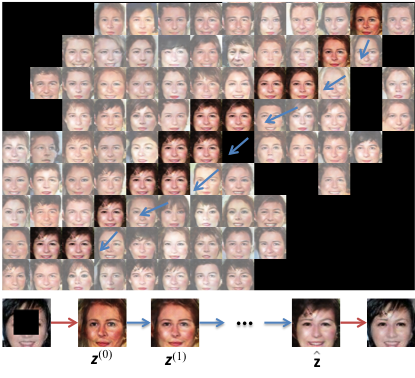

- In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018

- In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018

2017

- In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017