Radar and Camera Early Fusion for Vehicle Detection in ADAS

Teck-Yian Lim, Amin Ansari, Bence Major, Daniel Fontijne, Michael Hamilton, Radhika Gowaikar, Sundar Subramanian, Teja Sukhavasi

NeurIPS 2019 ML4AD Workshop

Abstract

The perception module is at the heart of modern Advanced Driver Assistance Systems (ADAS). To improve the quality and robustness of this module, especially in the presence of environmental noise such as varying lighting and weather conditions, sensor fusion (mainly of camera and LiDAR) has been the focus of recent studies. In this paper, we focus on a relatively unexplored area: the early fusion of camera and radar sensors. We feed a minimally processed radar signal, along with its corresponding camera frame, to our deep learning architecture to enhance the accuracy and robustness of our perception module. Our evaluation, performed on real-world data, suggests that the complementary nature of radar and camera signals can be leveraged to reduce the lateral error by 15% when applied to object detection.

Paper and Poster

NeurIPS 2019 ML4AD Workshop Poster Paper

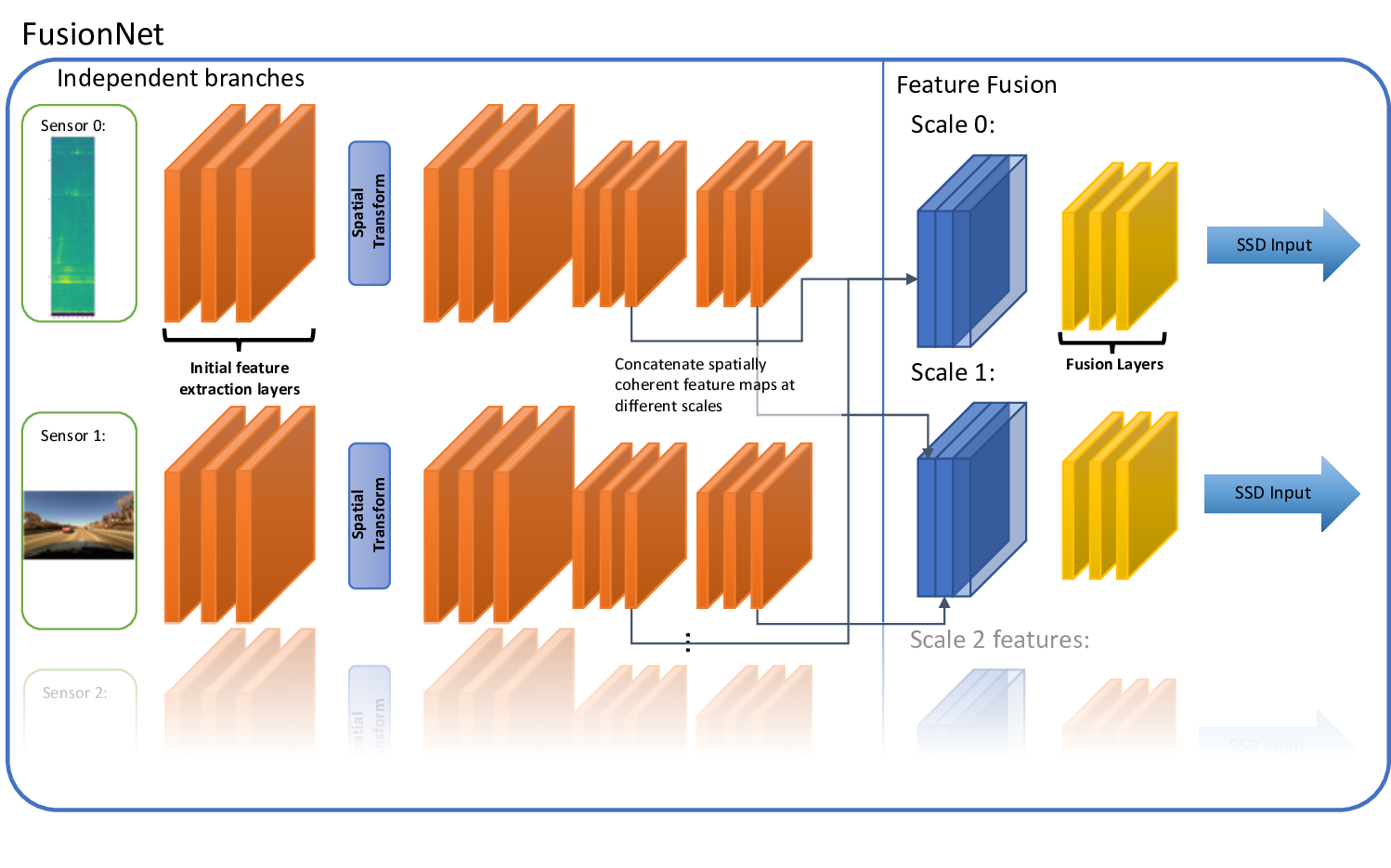

Overview

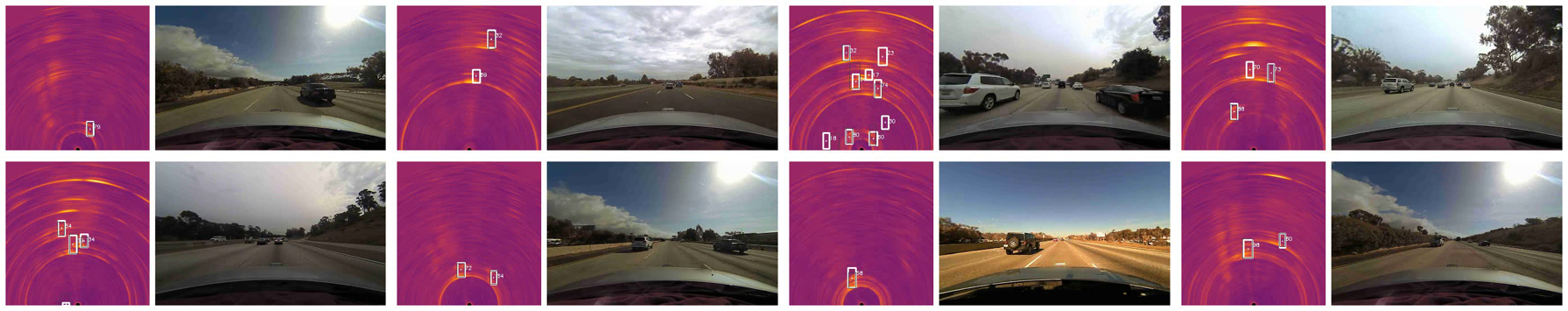

Results

White boxes are network outputs and black boxes are ground truth.

| Metric | Fusion (SGD) | Fusion (Adam) | Camera Only | Radar Only |
|---|---|---|---|---|
| mAP | 73.5% | 71.7% | 64.65% | 73.45% |
| Position x | 0.145m | 0.152m | 0.156m | 0.170m |
| Position y | 0.331m | 0.344m | 0.386m | 0.390m |
| Size x | 0.268m | 0.261m | 0.254m | 0.280m |
| Size y | 0.597m | 0.593m | 0.627m | 0.639m |
| Matches | 8695 | 8597 | 7805 | 8549 |

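We observed that our FusionNet outperforms the individual sensors on most metrics when trained with our proposed training scheme. The improvement in mAP is marginal (73.5% versus 73.45% for radar only), but the improvements in position and size estimates are more significant; for example, the position y error falls from 0.386m (camera only) and 0.390m (radar only) to 0.331m.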
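To make the comparison concrete, the relative error reductions can be computed directly from the table. The Python sketch below copies the error rows for Fusion (SGD), Camera Only and Radar Only; the names `RESULTS`, `relative_reduction` and `reduction_table` are illustrative helpers, not part of the authors' code.

```python
# Localization and size errors (metres, lower is better) copied from the results table.
RESULTS = {
    "Fusion (SGD)": {"Position x": 0.145, "Position y": 0.331, "Size x": 0.268, "Size y": 0.597},
    "Camera Only": {"Position x": 0.156, "Position y": 0.386, "Size x": 0.254, "Size y": 0.627},
    "Radar Only": {"Position x": 0.170, "Position y": 0.390, "Size x": 0.280, "Size y": 0.639},
}


def relative_reduction(fused: float, baseline: float) -> float:
    """Percent by which the fused error is below the baseline error (negative = fusion is worse)."""
    return 100.0 * (baseline - fused) / baseline


def reduction_table(results: dict, fused_key: str = "Fusion (SGD)") -> dict:
    """Map (baseline name, metric) -> percent error reduction achieved by the fused model."""
    fused = results[fused_key]
    return {
        (name, metric): relative_reduction(fused[metric], errors[metric])
        for name, errors in results.items()
        if name != fused_key
        for metric in fused
    }


if __name__ == "__main__":
    for (baseline, metric), pct in reduction_table(RESULTS).items():
        print(f"{metric:<10} vs {baseline:<11}: {pct:+5.1f}%")
```

With these numbers, the position y error of Fusion (SGD) is about 14% lower than camera only and about 15% lower than radar only, while size x is the one metric where camera only has the lower error.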

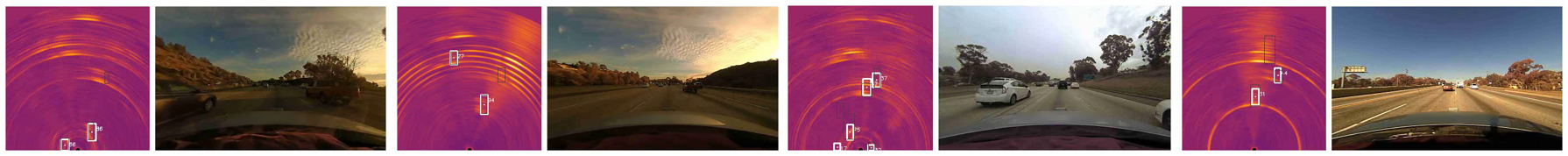

Failure cases

Ablation and sensitivity studies

| Metric | Fusion | Camera 0 | Radar 0 | Camera + Noise | Radar + Noise |
|---|---|---|---|---|---|
| mAP | 73.5% | 55.0% | 19.4% | 61.2% | 71.9% |
| Position x | 0.1458m | 0.1883m | 0.1816m | 0.1667m | 0.1524m |
| Position y | 0.3315m | 0.4297m | 0.3602m | 0.3847m | 0.3360m |
| Size x | 0.2688m | 0.3042m | 0.3230m | 0.4126m | 0.2686m |
| Size y | 0.5975m | 0.7829m | 0.5653m | 0.7022m | 0.5853m |
| Matches | 8695 | 8259 | 3051 | 8004 | 8554 |

Without retraining, we evaluated the network with the camera image or the radar range-azimuth image set to zero (Camera 0 and Radar 0, respectively). We also added Gaussian noise with mean 0 and \(\sigma=0.1\) to the normalized input camera image or radar frame (Camera + Noise and Radar + Noise, respectively). We found that the performance of the network drops significantly when either sensor is missing or when the camera image is corrupted; adding noise to the radar frame has a smaller effect (mAP of 71.9% versus 73.5%).
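The four perturbed conditions can be reproduced on the input side with a few lines of NumPy. This is a minimal sketch, assuming both inputs are already-normalized float arrays and that the noise is not clipped afterwards (neither detail is stated above); `perturb_inputs`, the mode names and the camera shape are hypothetical, and the trained network itself is not shown.

```python
import numpy as np

ABLATION_MODES = ("none", "camera_zero", "radar_zero", "camera_noise", "radar_noise")


def perturb_inputs(camera, radar, mode, sigma=0.1, rng=None):
    """Return copies of (camera, radar) modified for one ablation condition.

    Both inputs are assumed to be normalized float arrays (an assumption); the
    noise is added as-is, without clipping back into the normalized range.
    """
    if mode not in ABLATION_MODES:
        raise ValueError(f"unknown ablation mode: {mode!r}")
    rng = np.random.default_rng() if rng is None else rng
    # Work on float32 copies so the caller's arrays are never modified.
    camera = np.array(camera, dtype=np.float32, copy=True)
    radar = np.array(radar, dtype=np.float32, copy=True)
    if mode == "camera_zero":      # "Camera 0": camera image set to zero
        camera.fill(0.0)
    elif mode == "radar_zero":     # "Radar 0": radar range-azimuth image set to zero
        radar.fill(0.0)
    elif mode == "camera_noise":   # "Camera + Noise": N(0, sigma^2) added to the camera image
        camera += rng.normal(0.0, sigma, size=camera.shape).astype(np.float32)
    elif mode == "radar_noise":    # "Radar + Noise": N(0, sigma^2) added to the radar frame
        radar += rng.normal(0.0, sigma, size=radar.shape).astype(np.float32)
    return camera, radar


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    camera = rng.random((3, 256, 512), dtype=np.float32)  # hypothetical camera input shape
    radar = rng.random((256, 64), dtype=np.float32)       # 256 x 64 radar image size from the FAQ
    for mode in ABLATION_MODES:
        cam_in, radar_in = perturb_inputs(camera, radar, mode, rng=rng)
        # detections = model(cam_in, radar_in)  # hypothetical trained network, not shown
        print(mode, float(cam_in.mean()), float(radar_in.mean()))
```

Because the network is evaluated without retraining, only the inputs change between conditions; the same weights see every perturbed pair.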

FAQs from the NeurIPS ML4AD Workshop

- There are open datasets with radar; why didn't you evaluate on them?

- Our radar data differs from the radar data used by other published methods. Consequently, we cannot apply our method to published datasets with radar data, and vice versa.

- How does the radar data in this work compare with the radar data available in other datasets?

- In commercially packaged radar systems, data similar to ours is processed with some form of constant false alarm rate (CFAR) detection algorithm to produce a small number of points. In contrast, we work on the data before this detection step, which retains far more information than the points alone.

- Can such radar data (dense/minimally processed) be obtained from commercially available radar systems in cars today?

- Not that we know of. Commercially available radar systems include post-processing steps that return only sparse points, together with their velocities. We do not know of any commercially available vehicular radar package that makes the raw ADC readings available.

- Can we apply the post-processing techniques on your data to obtain the sparse points that off-the-shelf radar packages provide?

- In theory, yes. However, the design of the radar front end (the antenna pattern), the waveform parameters, and the tuning parameters of the post-processing are not available in the product specifications. Thus, while we can apply the same techniques in principle, we may not be able to reproduce the sparse-point detections that commercial systems produce without significant reverse-engineering effort.

- Will this dataset be made available?

- We hope to be able to share this dataset, but unfortunately we do not control it. Thus, we are currently unable to commit to a timeline for releasing it.

- The data bandwidth required by this radar seems far higher than that of radars in other system implementations. Is this impractical?

- The radar input to our proposed neural network is a \(256 \times 64\) float32 image. This is comparable to camera data, so we do not think it is an overly large requirement.

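The CFAR step mentioned in the FAQ is what reduces dense radar data to a handful of points. Which CFAR variant a given commercial radar uses is not public, so the sketch below shows only the textbook cell-averaging CFAR (CA-CFAR) on a 1-D power profile, assuming exponentially distributed noise power; the function name and the training, guard and false-alarm parameters are illustrative choices. Production pipelines often run a 2-D variant over range-Doppler or range-azimuth maps.

```python
import numpy as np


def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1-D power profile; returns a boolean detection mask.

    For each cell under test, the noise level is the mean of `num_train` training
    cells on each side, skipping `num_guard` guard cells next to the cell under test.
    The scale factor alpha gives false-alarm probability `pfa` when the noise power
    is exponentially distributed (square-law detected Gaussian noise).
    Cells too close to either edge to have a full window are never declared.
    """
    power = np.asarray(power, dtype=np.float64)
    n_train_total = 2 * num_train
    alpha = n_train_total * (pfa ** (-1.0 / n_train_total) - 1.0)
    half = num_train + num_guard
    detections = np.zeros(power.size, dtype=bool)
    for i in range(half, power.size - half):
        lead = power[i - half : i - num_guard]         # num_train cells before the guard band
        lag = power[i + num_guard + 1 : i + half + 1]  # num_train cells after the guard band
        noise = (lead.sum() + lag.sum()) / n_train_total
        detections[i] = power[i] > alpha * noise
    return detections


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    profile = rng.exponential(1.0, 256)  # noise-only power profile (synthetic)
    profile[100] += 50.0                 # one hypothetical strong reflector
    print(np.flatnonzero(ca_cfar_1d(profile)))
```

Every cell below its `alpha * noise` threshold is discarded, which is the information loss the FAQ answers refer to when contrasting sparse points with the dense input used here.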
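The \(256 \times 64\) float32 input size quoted in the FAQ translates into a per-frame size and data rate as follows. The frame rate and the camera resolution used for comparison are hypothetical placeholders, since neither is given on this page.

```python
from math import prod

BYTES_FLOAT32 = 4
BYTES_UINT8 = 1


def frame_bytes(shape, bytes_per_element):
    """Size in bytes of one dense frame with the given array shape."""
    return prod(shape) * bytes_per_element


def stream_mb_per_s(shape, bytes_per_element, fps):
    """Uncompressed data rate in megabytes (10**6 bytes) per second."""
    return frame_bytes(shape, bytes_per_element) * fps / 1e6


if __name__ == "__main__":
    radar_shape = (256, 64)          # radar input size from the FAQ, stored as float32
    camera_shape = (720, 1280, 3)    # hypothetical 1280x720 RGB camera frame, 8 bits per channel
    fps = 20                         # hypothetical frame rate for both sensors
    radar = frame_bytes(radar_shape, BYTES_FLOAT32)   # 65536 bytes (64 KiB) per frame
    camera = frame_bytes(camera_shape, BYTES_UINT8)
    print(f"radar : {radar} B/frame, {stream_mb_per_s(radar_shape, BYTES_FLOAT32, fps):.2f} MB/s")
    print(f"camera: {camera} B/frame, {stream_mb_per_s(camera_shape, BYTES_UINT8, fps):.2f} MB/s")
```

Under these assumptions, one radar input frame is smaller than one uncompressed camera frame of the assumed resolution.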

Cite

@article{radarfusion2019,
  title={Radar and Camera Early Fusion for Vehicle Detection in Advanced Driver Assistance Systems},
  author={Lim, Teck-Yian and Ansari, Amin and Major, Bence and Fontijne, Daniel and Hamilton, Michael and Gowaikar, Radhika and Subramanian, Sundar},
  journal={ {NeurIPS} Machine Learning for Autonomous Driving Workshop},
  year={2019}
}